Top-level view of one of my node CLI scripts

Table of Contents

- Introduction

- It's just JSON, how hard could it be?

- It's good to seek advice from others

- Where to start? The file system

- So what should this script do?

- Inquirer, but not Commander

- One needs clean data to start with

- Updating the 11ty Bundle source

- Oh, so you want to edit what you've entered?

- But wait, it gets better

- A couple of other scripts to help

- Once more, err, I mean twice more, with feeling

- Time for Commander? Not so much

- Show me the code

- While I have you...

TL;DR: I built a few node CLI scripts to support the management of the 11ty Bundle database.

UPDATE: No sooner had I posted this to the 11ty Discord server than it was pointed out to me that I was using an older version of the inquirer package. Many thanks to uncenter for pointing this out. According to the package author: "Inquirer recently underwent a rewrite from the ground up to reduce the package size and improve performance. The previous version of the package is still maintained (though not actively developed), and offered hundreds of community contributed prompts that might not have been migrated to the latest API." I have made the migration to use the newer @inquirer/prompts package. They are both from the same author and I like the changes that have been made. I have updated the code snippets in this post as well as the code on GitHub to reflect the new package.

Introduction

As you may or may not recall, the database for the 11tybundle.dev site started out as an Airtable base, but as I neared the maximum number of records allowed in their free tier, I moved it to a Google Sheet. It is a simple 2-dimensional structure, with each row representing one of four types of entries: posts, sites, releases, and starters.

While it lived in a Google Sheet, I created a set of Google Forms for entering the data for each of the four entry types. The result was a Google Sheet with five tabs: one that contained all of the records, and one for each entry type, populated by its respective form. And, with the help of some AI, I wrote some Google Apps scripts that would move the data from each of the form tabs to the main tab and detect any duplicates. I then created a sixth tab that, when told which was the current issue, would contain the filtered results of records from the main tab that were to be included in the current issue of the 11ty Bundle.

Overall, the system worked ok, but there were some things that it didn't do and some of these caused more pain than others. For example, I had no good way to validate the date format I had entered. So, from time to time, I'd build the site with a bad date. Another example, and this has become a more common issue, I would find a post that I thought I may have seen (and even entered) before. It wasn't until I entered the data and asked for it to be moved to the main tab that a duplicate was detected by the Google Apps script. I had done needless work. These were not insurmountable issues, but they continued to gnaw at me and I knew there had to be a better way.

On the back end of this, using the Google Sheets API, I was able to retrieve the contents of the main tab at build time and format the data into a nice JSON array of objects, each object representing one of the entries.

It's just JSON, how hard could it be?

Since the data was all in JSON by the time I processed it with various filters to generate the data that drives the site, it occurred to me: why not just keep it in JSON and edit the entries directly?

It sounds simple enough, right? What were my options that made adding entries simple, since adding entries is most of what I do?

I could just use VS Code and create a snippet with variables for each of the four entry types. Sure, I could, but...I wanted more than the kind of regex-based validation that could be incorporated into a snippet.

But wait, there has to be some kind of customizable JSON editor out in the open source world, right? Well, there are a few, but most of the things I found are generalized editors that rely on JSON schema to provide validation. I really wanted to keep things simple, and that just didn't sound simple...to me, at least.

Ok, then what about building my own custom web-based editor to manage the JSON file. How hard could that be? I would need access to the local file system, which is (as I understand it) not something that is supported directly in the browser.

I could use a framework like Electron to build a desktop app that would have node under the hood, thereby giving me access to the file system. I played with it a while and read a lot of docs and watched a lot of videos. But again, it just seemed like a lot of work for what I wanted to do.

Oh, and there's this thing called Express that also runs on node. I could build an Express server that would serve up the JSON file and allow me to edit it. Again, I read docs and watched videos. I even started down this path by sketching the UI on paper and writing the basic HTML and CSS for the editor to bolt on to Express or Electron.

I have to say that I'm not a fan of frameworks that have so much stuff to wade through to do something that I think should be simple.

I think I've been traumatized by my early professional career of building embedded systems with very limited resources (and writing in assembly language). I prefer to write relatively small amounts of code where I can understand the whole thing. Frameworks ain't that. Maybe that's why I like Eleventy so much.

It's good to seek advice from others

After all this, I decided to reach out to a couple of people in the Eleventy community whom I trust and who have a lot more web development experience than I do. The suggestions were mixed, but one thing struck a chord. It came from Chris Burnell, and it was a reference to some work that Robb Knight had done to support his blogging workflow (which is a lot more involved than mine). He had built a CLI using the commander and inquirer packages. This sounded like a reasonable path to investigate. Off I went to read the docs and watch some videos.

Where to start? The file system

I had never written any javascript to read from or write to the file system. I thought I should probably start there, because if I couldn't do that, the rest would be a waste of time. I have to say that I thrashed around for a while to get the basics working. I ultimately realized that I was not building some large, scalable system, but a single-purpose, sole-use script. I decided to access the file system using synchronous calls, only needing node's fs. Once I could read the file, add some stuff to it, and write it back out, I was ready to start building the real script.
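A minimal sketch of that read, add, write cycle might look like the following. It assumes the database is a single JSON file holding an array of objects; the appendEntry helper, the temp-file demo, and the field names (Type, Title, Link) are made up for illustration and are not taken from the actual scripts.

```javascript
const fs = require("node:fs");
const os = require("node:os");
const path = require("node:path");

// Hypothetical helper: append one entry to a JSON file that holds an array.
function appendEntry(filePath, entry) {
  // Read and parse the whole file synchronously.
  const entries = JSON.parse(fs.readFileSync(filePath, "utf8"));
  entries.push(entry);
  // Write everything back, pretty-printed with two-space indentation.
  fs.writeFileSync(filePath, JSON.stringify(entries, null, 2));
  return entries.length;
}

// Try it against a throwaway file in the system temp directory.
const demoDir = fs.mkdtempSync(path.join(os.tmpdir(), "bundle-"));
const demoFile = path.join(demoDir, "db.json");
fs.writeFileSync(demoFile, "[]");

const count = appendEntry(demoFile, {
  Type: "site", // illustrative field names, not the real schema
  Title: "Example",
  Link: "https://example.com/",
});
console.log(`Entries in the file: ${count}`);
```

Synchronous calls block the process while they run, which is usually what you want to avoid in a server but is perfectly reasonable for a one-person command line script that does one thing and exits.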

So what should this script do?

Having nailed down basic file read and write, I thought it would be simplest to start by generating a JSON object for each type of entry. That would require me to make use of the inquirer package to request the data for each part of an entry. They vary a bit, but each has some common info, such as the issue number of the 11ty Bundle blog, a title, and a link. For sites and starters, that's all that was required. For posts, I needed more info (author, date, and categories) and for releases, I needed to add a date to the common info.

After several days, I had the basics working, and that was really cool. It's hard to express how I felt to get the first entry successfully written to the file. I was even able to validate several of the fields: testing that the date is in the right format and is not later than today, and checking whether the URL is already in the database. And with inquirer, I can select categories from a pre-defined list as checkboxes. It's all pretty amazing, IMO (perhaps I'm too easily amazed).
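As a rough illustration of the date check described above, here is one way such a validator could be written. The YYYY-MM-DD format is an assumption (the post doesn't say which format is used), and validateDate is a hypothetical name. It returns true or an error message, which is the shape that inquirer's validate option accepts.

```javascript
// Hypothetical validator: returns true when the input is acceptable,
// otherwise a message explaining what is wrong.
// Assumes dates are entered as YYYY-MM-DD.
function validateDate(input, today = new Date()) {
  if (!/^\d{4}-\d{2}-\d{2}$/.test(input)) {
    return "Please use the YYYY-MM-DD format.";
  }
  const [year, month, day] = input.split("-").map(Number);
  const date = new Date(Date.UTC(year, month - 1, day));
  // Date silently rolls impossible dates over (Feb 30 becomes Mar 1),
  // so confirm that the parts survived the round trip.
  if (
    date.getUTCFullYear() !== year ||
    date.getUTCMonth() !== month - 1 ||
    date.getUTCDate() !== day
  ) {
    return "That is not a real calendar date.";
  }
  // ISO dates sort correctly as strings; "today" here is today's date in UTC.
  if (input > today.toISOString().slice(0, 10)) {
    return "The date cannot be later than today.";
  }
  return true;
}

console.log(validateDate("2024-02-30"));
```

Passing today in as a parameter is only there to make the function easy to try out with a fixed date; in normal use you would leave it off.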

Inquirer, but not Commander

Initially, I took a look at commander, and it seemed like a little more than what I needed. Having said that, now that I've built several different scripts using inquirer, I can imagine using commander for selecting which of the scripts to run by way of a command line option. But that's for another day...first things first.

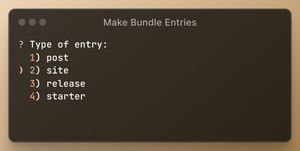

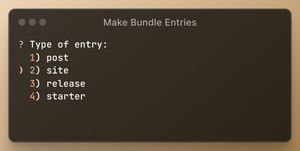

This view of the CLI is from the top level of my "Make Bundle Entries" script.

The opening presentation of the Make Bundle Entries script

The inquirer package generates it with very little code:

import { rawlist } from "@inquirer/prompts";

// Ask which type of entry to create; each value doubles as its label.
const entryType = await rawlist({
  message: "Type of entry:",
  choices: [
    { value: "post" },
    { value: "site" },
    { value: "release" },
    { value: "starter" },
  ],
});

// Hand off to the function that prompts for the chosen entry type.
switch (entryType) {
  case "post":
    await enterPost();
    break;
  case "site":
    await enterSite();
    break;
  case "release":
    await enterRelease();
    break;
  case "starter":
    await enterStarter();
    break;
  default:
    console.log("Invalid ENTRY type");
    return;
}

With that, each of those "enter" functions prompts for the data required for that type of entry (each of them using inquirer too). Pretty amazing, eh?

Well, that got me started on a multi-day journey. I'm not that skilled in javascript, so I will admit that I used some AI along the way. I used it more as a tutor, asking it questions more than asking it for code. For example, I'm finding it a great alternative to going to MDN to look up how a particular array function works.

One needs clean data to start with

One of the things that I had the Google Apps script do is make a backup JSON file and store it in my Google Drive each time I copied the form data tabs to the main tab. I downloaded the latest one and realized that it needed some cleaning. As it turns out, there was some extraneous cruft at the end of some of the entries. Fortunately, said cruft was ignored by my build process. But, I wanted to clean it up...nothing a few find/replace operations couldn't handle.

One of the things that I had forgotten about was that the categories field of the post entries was stored as a string of comma-separated values and not an array of strings. I had corrected for this in one of my build filters. It made sense to get rid of that filter and adjust the data accordingly. Again, not very difficult.
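For illustration, converting a comma-separated categories string into an array of strings could be done along these lines. The field name Categories and the helper name are assumptions, not the names used in the real data or scripts.

```javascript
// Hypothetical one-time cleanup: turn "A, B, C" into ["A", "B", "C"].
// Entries whose Categories field is not a string are returned untouched.
function categoriesToArray(entry) {
  if (typeof entry.Categories !== "string") return entry;
  const categories = entry.Categories
    .split(",")
    .map((name) => name.trim()) // drop the spaces around each name
    .filter((name) => name.length > 0); // drop empties from stray commas
  return { ...entry, Categories: categories };
}

const before = { Title: "Example post", Categories: "CSS, Images, " };
console.log(categoriesToArray(before));
```

Running every entry through a function like this once (for example with Array.prototype.map) and writing the result back out means the build no longer needs a filter to do the same conversion each time.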

Updating the 11ty Bundle source

I had managed to use the Google Sheets API in a pretty contained way. I had a single file, called fetchsheetdata.js, in my _data directory. Once the data is fetched, another data file slices, dices, and filters the JSON, generating the objects for most of the templates that drive the site. To incorporate the use of the JSON file directly, all I had to do was replace a call to the fetching function with a reference to the JSON file. In other words, the heart transplant was pretty straightforward and the patient survived.

Oh, so you want to edit what you've entered?

Let's just say that the great feeling of getting something new working simply doesn't last long enough. I started using my new toy and realized that I would occasionally make a mistake, but I could not edit what I had just entered even though it was just displayed in front of me. I'd have to open the file in VS Code, scroll to the end and delete the last entry, run the script again, and re-enter all the data with the correct info. I had to add an edit feature.

It sounds simple enough. I'd have to generate a prompt after the initial entry and then ask what to do next. I came up with three possible next steps: (1) save the entry and exit, (2) save the entry and add another (perhaps of a different type), or (3) edit what I had just entered.

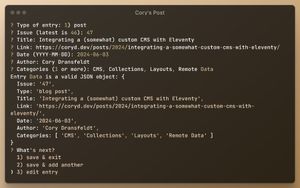

Here's what that looks like for one of Cory Dransfeldt's recent post entries. I enter the data, show the resulting validated JSON object, and then ask what to do next.

I cannot tell you how much I love this workflow for creating entries.

A terminal view of entering a post and the follow-up questions

But wait, it gets better

On top of this, another aspect of what makes this workflow so awesome is my recent migration from Alfred to Raycast. Both of them work as a launcher, snippet generator, and all-around workflow supporter. Raycast is a Mac-only utility that does a lot of what Alfred does, and a lot more.

The first time I heard of Raycast was from Robb Knight's blog. He's written a few posts - here, here, and here - about his thoughts on Raycast. Robb really does a lot to infect people...and I mean that in a good way.

So how does this help my CLI workflow? With Raycast, I can create something called a Script Command that will open a terminal and run my node script, prompting me for an entry type. I can give it an alias and then bring up Raycast and start typing the alias...or, better yet, I can assign it a hotkey and no matter where I am on my Mac, that hotkey will launch my script in a terminal window and ask what kind of entry I want to add. That capability along with the clipboard history (which Alfred also has) makes this workflow so much more efficient. I can find a blog post, copy the URL, title, and author, hit the hotkey, paste those things in, add the few other tidbits and I'm done. Is that sweet or what?

A couple of other scripts to help

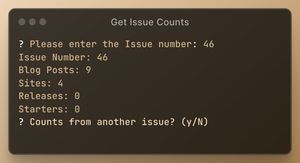

Once I got the hang of this, I realized that I needed a way to find out how many of each type of entry were in the database for the next blog post. So I wrote a simple script to prompt me for an issue number and it spits out just what I need, like this:

Show me the item counts for a given issue
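The counting itself needs very little code. Here is a sketch of the idea, assuming each entry carries Issue and Type fields (both names are guesses on my part) and that the entries have already been read from the JSON file.

```javascript
// Hypothetical helper: tally entries of each type for one issue number.
function countByType(entries, issue) {
  const counts = {};
  for (const entry of entries) {
    // Compare as numbers so that "42" and 42 are treated the same.
    if (Number(entry.Issue) !== Number(issue)) continue;
    counts[entry.Type] = (counts[entry.Type] ?? 0) + 1;
  }
  return counts;
}

const sample = [
  { Issue: 42, Type: "post" },
  { Issue: 42, Type: "post" },
  { Issue: "42", Type: "site" },
  { Issue: 41, Type: "release" },
];
console.log(countByType(sample, 42));
```

Types with no entries for the requested issue simply don't appear in the result.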

Another script lets me check a URL to see if it's already in the database. While it does that check when I make an entry, if I have a sense that I may have seen the post before, it makes no sense to enter several items and then find out that it's a duplicate. With this script, I can do a simple check before spending more time on it. It looks like this:

Check for a URL in the database
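A duplicate check like this can be sketched as a simple lookup. The Link field name and both helper names are hypothetical, and ignoring a trailing slash is an extra assumption that the original script may or may not make.

```javascript
// Hypothetical normalizer: trim whitespace and ignore trailing slashes so
// that "https://example.com/post/" matches "https://example.com/post".
function normalizeUrl(url) {
  return url.trim().replace(/\/+$/, "");
}

// Return the first entry whose link matches, or undefined if there is none.
function findByUrl(entries, url) {
  const wanted = normalizeUrl(url);
  return entries.find((entry) => normalizeUrl(entry.Link) === wanted);
}

const database = [
  { Title: "First post", Link: "https://example.com/first/" },
  { Title: "Second post", Link: "https://example.com/second" },
];
const match = findByUrl(database, " https://example.com/first ");
console.log(match ? `Already in the database: ${match.Title}` : "Not found");
```

Because the same function can back both the standalone check and the validation step during entry, the two can't drift apart in what they consider a duplicate.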

Once more, err, I mean twice more, with feeling

Once I had the file updating with new entries, there was a single step remaining. I needed a way to push the repo to GitHub to trigger a new site build on Netlify.

Raycast to the rescue. I have a script command that does the miraculous push. So once I've added a few entries and want to get them on the site (for all you Firehose RSS subscribers), I'm just a hotkey away from getting it done.

I'd almost forgotten another script that I wrote. It goes through the entire JSON file and verifies that all of the links are still accessible. Sometimes, sites are abandoned or URL structures are changed, and I need to find those and fix them, if possible. For those URLs that are no longer accessible, the script writes them out in an HTML file with anchor links so that I can manually re-test them. The method I'm using relies on the axios package and I'm not sure it gives sites enough time to respond. So some of the links it says are not accessible may be just fine. But, it's a start and I can improve the technique over time.
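One way to give slow sites more time is to make the timeout explicit. The sketch below is not the axios-based script described above; it swaps in Node's built-in fetch (Node 18 or later) together with AbortSignal.timeout, and the function names, the 10 second default, and the fetchImpl option are all assumptions made for the example.

```javascript
// Check one URL, giving the server up to timeoutMs to respond.
// fetchImpl is injectable so the function can be tried without a network.
async function checkLink(url, { timeoutMs = 10000, fetchImpl = fetch } = {}) {
  try {
    const response = await fetchImpl(url, {
      redirect: "follow",
      signal: AbortSignal.timeout(timeoutMs), // aborts the request when time runs out
    });
    return { url, ok: response.ok, status: response.status };
  } catch (error) {
    // Network failures and timeouts both end up here.
    return { url, ok: false, status: null, reason: error.name };
  }
}

// Check the URLs one at a time and return only the ones that failed.
async function findBrokenLinks(urls, options) {
  const results = [];
  for (const url of urls) {
    results.push(await checkLink(url, options));
  }
  return results.filter((result) => !result.ok);
}
```

Calling findBrokenLinks(urls, { timeoutMs: 30000 }) would allow each site thirty seconds. Checking one link at a time is slow for a large file but avoids flooding any one host with requests, and a failed check still deserves a manual re-test because some servers refuse automated requests outright.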

Time for Commander? Not so much

Now that I have these things running, I might consider trying out commander to see if I can make a single script that will run any of the scripts I've written. But since it's only a handful and they're hotkey accessible, it's not a priority. I've got some other ideas for making the site better for the visitors. And with this under-the-hood developer experience work, it might free up some time to make that happen.

While this has been a very enjoyable, if at times frustrating journey, I have learned a lot and that is part of what keeps me going. That and the amazing Eleventy community. I'm so glad to be a part of it.

Show me the code

If you're really interested in seeing the code for these scripts, they're on GitHub. Be warned, they're not very well documented. The make-bundle-entries script might look particularly hairy. I'm not a professional web developer and I'm sure there are things that could be done better. They work for me and that's what counts.

While I have you...

If you're interested in the 11ty Bundle, it's got a boatload of free resources to help you with your Eleventy journey. You can subscribe to the Firehose RSS feed to get every post that I add to the site. You can also subscribe to the 11ty Bundle blog RSS feed. And if you'd rather get the 11ty Bundle blog posts via email, subscribe here. Lastly, you can send me an email with any questions or comments.

[OK, I'm done proof-reading this post. It's time to publish it.]
